The Agent Maturity Model

How far we are from human-like Agents and a roadmap to get there

TL;DR

AI Agents need to do five things to be comparable to humans:

Do things.

Do things better, by learning.

Reason over weeks and months of time.

Collaborate with non-agents towards outcomes.

Have autonomy within resource constraints.

What is an agent?

There’s a lot of debate right now on what an AI agent actually is.

Today’s definition of an agent is an LLM with reasoning and tool calling. While this appears to act the way a human does, it couldn’t be further from what non-programmers expect. You can imagine these systems getting better, faster, and cheaper, but that simple framework will never compare to human agents.

We suggest that this is a functional view of an agent-like system, not the systematic view required to relate AI agents to humans. The framework we propose redefines the term “Agent” and breaks down a conceptual model of what it will take to reach the equivalent of human agency by holistically moving entire cognitive tasks onto computers.

So here’s our first pass at sharing the thinking, terminology, and full maturity model we’ve adopted while building our Agent platform, Tarka.

A layered approach

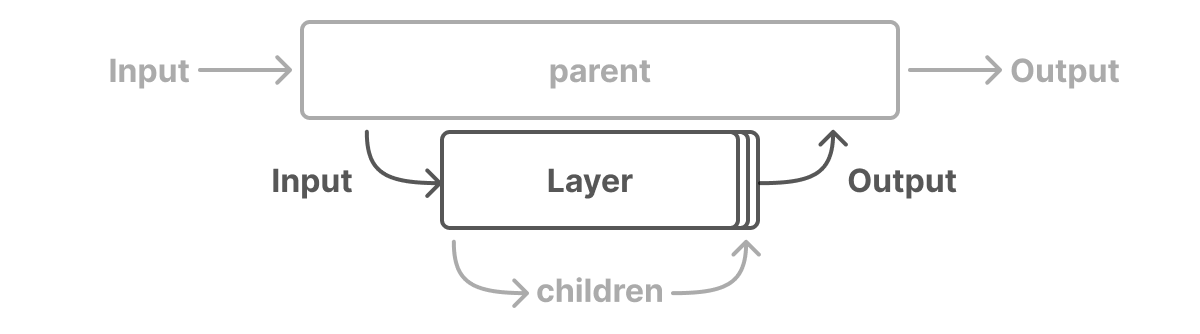

Taking inspiration from the OSI model of networking, let’s start with our building block: a Layer.

A Layer is logically separated from the layers above and below it. It receives input and returns output. A layer usually doesn’t understand the full context of the layer above it (its parent), only enough to do its own work, often by creating and managing lower layers (its children). Beyond its input and output, each layer has unique properties that separate it from the other layers.
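As a rough illustration of the Layer construct, here is a minimal Python sketch. The class names (`Layer`, `Doubler`, `SumOfDoubles`) are hypothetical and the work is deliberately trivial; what matters is the shape: every Layer takes an input and returns an output, and a parent does its work by creating and managing child instances that each see only the slice of context they need.

```python
from abc import ABC, abstractmethod
from typing import Any


class Layer(ABC):
    """Hypothetical base class: every Layer receives an input and returns an output."""

    @abstractmethod
    def run(self, payload: Any) -> Any:
        ...


class Doubler(Layer):
    """Child layer: does one narrow job and knows nothing about its parent."""

    def run(self, payload: int) -> int:
        return payload * 2


class SumOfDoubles(Layer):
    """Parent layer: does its work by creating and managing child layers."""

    def run(self, payload: list[int]) -> int:
        # One child instance per item; each child sees only its own item.
        children = [Doubler() for _ in payload]
        return sum(child.run(item) for child, item in zip(children, payload))


print(SumOfDoubles().run([1, 2, 3]))  # 12
```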

Usually, several instances of the same layer together make up the work of the parent layer. A better contextual visual of a single layer might be this:

The Agent Maturity Model (AMM)

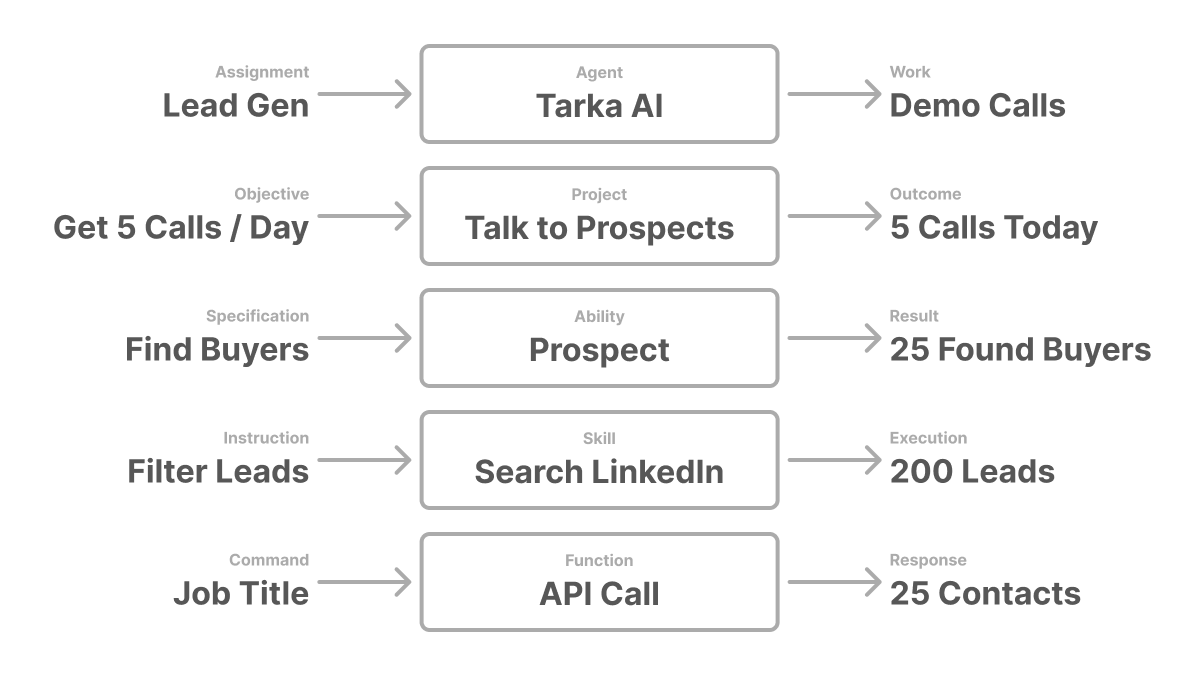

The layer construct allows us to conceptually build a five-layer system that makes up an Agent, and to construct a unique grammar for the entire system that we can use as a shared glossary.

Let’s break each layer down, starting from the bottom.

Throughout, let’s compare each layer to how humans themselves mature.

I have two young children (5 and 7 years old), and even though I’m not a professional teacher, I’m often reminded how difficult the simple things I take for granted can be. Let's look at my fleshy little agents along the way.

Functions

fka tools or API calls

The first layer (starting from the bottom) is the simplest building block: a Function.

Functions are black boxes to the agent, so from the agent’s perspective they either appear to work or they don’t. A Function might be idempotent, or it might not. Importantly, a Function cannot reason.

A Function is directed by a Command input and returns a Response output. Used by Skills, Functions are the most granular operation: usually a single service (code) or API call that can run in sequence or in parallel with other Functions.
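A minimal sketch of the Function layer, assuming plain Python callables stand in for single service or API calls. `Response`, `call_function`, `toothpaste_level`, and `dispense` are hypothetical names. The wrapper treats each Function as a black box: a Command (keyword arguments) goes in, and the Agent only learns whether the Response succeeded, never how the Function works inside. The same wrapper runs Functions in sequence or in parallel through the standard library’s `ThreadPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Response:
    ok: bool                     # to the Agent, a Function simply works or it doesn't
    value: Any = None
    error: Optional[str] = None


def call_function(fn: Callable[..., Any], command: dict) -> Response:
    """Run a Function as a black box: a Command goes in, a Response comes out."""
    try:
        return Response(ok=True, value=fn(**command))
    except Exception as exc:     # the Agent learns only that it failed
        return Response(ok=False, error=repr(exc))


# Hypothetical Functions standing in for single service or API calls.
def toothpaste_level(tube: str) -> int:
    return {"bathroom": 0, "hall": 60}[tube]


def dispense(grams: int) -> str:
    if grams <= 0:
        raise ValueError("grams must be positive")
    return f"dispensed {grams}g"


# Run in sequence...
level = call_function(toothpaste_level, {"tube": "hall"})
paste = call_function(dispense, {"grams": 1})

# ...or in parallel.
with ThreadPoolExecutor() as pool:
    futures = [pool.submit(call_function, toothpaste_level, {"tube": t})
               for t in ("bathroom", "hall", "kitchen")]
    responses = [f.result() for f in futures]

print(level.value, paste.value)   # 60 dispensed 1g
print([r.ok for r in responses])  # [True, True, False]
```

The unknown "kitchen" tube raises inside the Function, but the Agent only ever sees `ok=False`, which is the black-box property described above.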

If you’re interested in building AI agents, the Model Context Protocol (MCP) is probably the leading open-source implementation of this layer. Read more: https://modelcontextprotocol.io

In the real world, Functions are like inanimate objects: tools that Agents use to do things. A chair can be pulled out from under a table to sit on, or pushed under the table to tidy up. It can also be turned upside down to serve as a fort, or to store toys.

My kids are learning how to brush their teeth. They’re learning how a toothbrush works and about different types of toothpaste (both Functions). They don’t understand how toothpaste cleans teeth at the molecular level, but they don’t need to in order to get the desired result from it.

Learning Functions? Yes! Agents build up their own set of Functions from the vast array of possible ones. How? Through Skills.

Skills

fka actions, tasks, activities, steps, memory, or stories.

Skills are the second layer of an AI agent, and one that most “AI agents” currently lack. A Skill is a composite set of Functions, joined together by an Instruction input, that delivers an Execution output. It takes no cognitive reasoning to execute and, to most LLMs, looks like a functional “tool” call.
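Here is a sketch of a Skill as a fixed composition of Functions, assuming an Instruction is just a dictionary of parameters. `Skill`, `Execution`, and the three toothbrushing Functions are hypothetical. Note that `run` makes no decisions: it calls the same Functions in the same order every time, which is why no reasoning is needed to execute it.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Instruction = dict[str, Any]  # hypothetical: just the parameters a Skill needs


@dataclass
class Execution:
    """Output of a Skill: each Function that ran and what it returned."""
    steps: list[tuple[str, Any]] = field(default_factory=list)


@dataclass
class Skill:
    """Hypothetical Skill: a fixed, deterministic composition of Functions."""
    name: str
    functions: list[Callable[[Instruction], Any]]

    def run(self, instruction: Instruction) -> Execution:
        execution = Execution()
        for fn in self.functions:  # same order every time; nothing is decided here
            execution.steps.append((fn.__name__, fn(instruction)))
        return execution


# Hypothetical Functions that make up a "Brush teeth" Skill.
def wet_brush(instruction: Instruction) -> str:
    return "brush wet"


def apply_paste(instruction: Instruction) -> str:
    return f"applied {instruction['paste']}"


def scrub(instruction: Instruction) -> str:
    return f"scrubbed for {instruction['seconds']}s"


brush_teeth = Skill("Brush teeth", [wet_brush, apply_paste, scrub])
result = brush_teeth.run({"paste": "mint", "seconds": 120})
print(result.steps)
```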

Agents may turn reasoning into repeatable Skills, saved as code workflows that run at execution time, similar to how humans “crystallize intelligence” (what dual-process theory calls System 1 processing). Skills are things we take for granted, but each one had to be developed at some point. They usually remain functional even with neurocognitive impairments such as ADHD or dementia, though some people may never develop them.

We’ve made this a second layer, separate from Functions, because a Skill can be improved. An Agent, just like a human, has to be able to develop its own Skills. A Skill is “saved” into code and optimized along three pillars (cost, speed, and quality) for the desired use case.

To “get ready for bed,” my kids have to perform different Skills like “Take a shower,” “Brush teeth,” or “Calm our bodies down.” These all take cognition, and small variables can easily throw off a routine Skill (what happens when there’s no hot water, or the toothpaste is empty?). They run into Functions they don’t have yet (buying toothpaste), or they don’t yet know how to develop a new Skill through reasoning (getting toothpaste from another bathroom).

Importantly, they can improve the “Brush teeth” Skill over time: they can create “conditional logic” and save it so they don’t have to spend mental effort on it in the future.

So should all Agents be able to code? Yes! All Agents should have a “learn” Skill for writing and saving code. Just as human agents can improve their own Skills, AI Agents need to be able to improve theirs; their substrate is simply code. How? With the next layer: Abilities.
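One way to picture the “learn” Skill is as a function that saves new or improved Skill code into a library. In this sketch, `learn`, `skill_library`, and `skill_source` are hypothetical; the source strings are hard-coded where a real Agent would generate them through reasoning, and `exec` stands in for whatever sandboxed, tested code-loading mechanism a production system would need. Version 2 saves the “empty toothpaste” fix as conditional logic, so later runs take the right branch without any reasoning.

```python
from typing import Callable

skill_library: dict[str, Callable] = {}  # hypothetical store of saved Skills
skill_source: dict[str, str] = {}        # the code each Skill was saved as


def learn(name: str, source: str) -> Callable:
    """Hypothetical 'learn' Skill: save new or improved Skill code.

    In practice the source would come from the Agent's own reasoning and should be
    sandboxed and tested before use; exec() keeps this sketch short.
    """
    namespace: dict = {}
    exec(source, namespace)
    skill_library[name] = namespace[name]
    skill_source[name] = source
    return skill_library[name]


# Version 1: the routine Skill, with no handling for an empty tube.
learn("brush_teeth", """
def brush_teeth(state):
    return "brushed with " + state["paste"]
""")

# Version 2: after running into an empty tube once, the fix is saved as
# conditional logic so it never has to be reasoned about again.
learn("brush_teeth", """
def brush_teeth(state):
    paste = state["paste"] if state["paste_left"] > 0 else state["backup_paste"]
    return "brushed with " + paste
""")

state = {"paste": "mint", "paste_left": 0, "backup_paste": "bubblegum"}
print(skill_library["brush_teeth"](state))  # brushed with bubblegum
```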

Abilities

fka reasoning, workflows, processes, state, or chains

An Ability is the largest unit of work that a single Agent can complete independently, usually with reasoning, as a non-deterministic workflow of multiple Skills. It receives a Specification input and delivers a Result output. This is what current AI simply calls “reasoning.”

In humans, this is “fluid intelligence,” or System 2 processing in dual-process theory. It is executive cognition, requiring working memory, cognitive flexibility, and inhibitory control, all closely associated with the prefrontal cortex.

Additionally, just as traditional code is saved into workflows or processes, Agents can be optimized to reason through their Skills, choosing the right Skills in the moment to produce a Result. If an Agent keeps performing the same sequence of Skills, it should consider crystallizing that intelligence into deterministic code.
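The sketch below contrasts an Ability with a crystallized workflow, under loud assumptions: `rng.choice` stands in for an LLM choosing the next Skill, the Skills are hypothetical bedtime steps, and `crystallize` uses a simple frequency threshold as its trigger. Each run may pick a different order, yet every run reaches the same Specification; once one sequence has recurred often enough, it is frozen into a deterministic workflow with no choices left.

```python
import random
from collections import Counter
from typing import Callable, Optional

# Hypothetical Skills: each takes the set of completed steps and returns an updated set.
SKILLS: dict[str, Callable[[frozenset], frozenset]] = {
    "shower": lambda done: done | {"clean"},
    "brush_teeth": lambda done: done | {"teeth"},
    "eat_vitamin": lambda done: done | {"vitamin"},
}


def ability(spec: frozenset, rng: random.Random, traces: Counter) -> frozenset:
    """An Ability: reach the Specification by choosing Skills in the moment.

    rng.choice stands in for an LLM's non-deterministic choice of the next Skill.
    """
    done: frozenset = frozenset()
    trace: list[str] = []
    while not spec <= done:
        name = rng.choice(sorted(SKILLS))
        updated = SKILLS[name](done)
        if updated != done:  # record only Skills that made progress
            trace.append(name)
            done = updated
    traces[tuple(trace)] += 1  # remember which workflow this run used
    return done


def crystallize(traces: Counter, threshold: int) -> Optional[Callable[[], frozenset]]:
    """If one Skill sequence keeps recurring, freeze it into deterministic code."""
    if not traces:
        return None
    sequence, count = traces.most_common(1)[0]
    if count < threshold:
        return None

    def fixed_workflow() -> frozenset:
        done: frozenset = frozenset()
        for name in sequence:  # no choices left: same order every time
            done = SKILLS[name](done)
        return done

    return fixed_workflow


SPEC = frozenset({"clean", "teeth", "vitamin"})
rng = random.Random(7)
traces: Counter = Counter()
results = [ability(SPEC, rng, traces) for _ in range(30)]

print(all(r == SPEC for r in results))  # True: same Result, whatever the order
workflow = crystallize(traces, threshold=5)
print(workflow() == SPEC)  # True
```

With 30 runs and only six possible orderings of three Skills, at least one ordering must occur five or more times, so `crystallize(traces, threshold=5)` always returns a workflow here.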

If you’re interested in building AI agents, Letta (fka MemGPT), with its stateful agents, is probably the leading open-source implementation of this layer. Read more: https://docs.letta.com/stateful-agents

Let’s look at how my kids “Get Ready for Bed” on their own. This requires high-level reasoning, plus the knowledge to stitch their Skills together into a workflow that adapts to different contexts. Each of my kids reasons through the process differently: my son prefers to brush his teeth, then eat a red vitamin, then shower, while my daughter prefers to shower, eat an orange vitamin, then brush her teeth.

From an Agent perspective, the Ability is the same because the Result is the same, but their reasoning and workflows are encoded very differently.

What about chains of chains? Chains of Abilities? Abilities of Abilities? We just call those Abilities; they’re no different, unless the Agent cannot accomplish the work by itself. Then it’s called a Project.

Projects

fka programs or plans

A Project is a structured collection of work, a series of Abilities, aimed at reaching a specific Outcome output based on its Objective input. Importantly, Projects require outside collaboration, for example with other Agents or with things outside the Agent’s control (humans, systems, etc.), to accomplish the Outcome. A Project held within a single Agent that has no interface to others can appear self-contained, but it cannot reach an Outcome on its own.

If your agent needs an extended period of time or is waiting on additional input, that’s a good sign it’s working on a Project, not just a self-contained Ability.
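A Project differs from an Ability because part of what it needs lies outside the Agent. The sketch below models that outside input as an `asyncio` Future that some other party (a human or external system) resolves, with a timeout standing in for “an extended period of time.” `run_ability` and `run_project` are hypothetical names; without the outside answer, the Project stays blocked and cannot reach its Outcome.

```python
import asyncio


async def run_ability(spec: str) -> str:
    """Stand-in for an Ability the Agent can complete on its own."""
    await asyncio.sleep(0)
    return f"result({spec})"


async def run_project(objective: str, outside_input: asyncio.Future, timeout: float) -> str:
    """Hypothetical Project: Abilities plus a dependency outside the Agent's control."""
    draft = await run_ability(f"draft for {objective}")
    try:
        # The Agent cannot produce this itself; a human or external system must.
        feedback = await asyncio.wait_for(outside_input, timeout)
    except asyncio.TimeoutError:
        return "blocked: waiting on outside input"
    final = await run_ability(f"{draft} + {feedback}")
    return f"outcome: {final}"


async def main() -> tuple:
    loop = asyncio.get_running_loop()

    # Nobody responds, so the Project cannot reach its Outcome.
    silent = loop.create_future()
    blocked = await run_project("launch plan", silent, timeout=0.05)

    # An outside collaborator responds after a short delay.
    answered = loop.create_future()
    loop.call_later(0.01, answered.set_result, "manager approval")
    done = await run_project("launch plan", answered, timeout=1.0)
    return blocked, done


blocked, done = asyncio.run(main())
print(blocked)  # blocked: waiting on outside input
print(done)     # outcome: result(result(draft for launch plan) + manager approval)
```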

We have yet to find a leading provider at the Project level. If you know of one, we would love to review it. The closest things we’ve found are various attempts at multi-agent systems (e.g., https://docs.letta.com/guides/agents/multi-agent), but these are missing non-agent operations.

My kids “get ready for bed” at a set time to keep them in a routine and make our lives easier. They can then wake up happier and be productive at school or play the next day. So the Project that bedtime serves is “continue developing,” where the Objective resets daily (i.e., “learn a little bit tomorrow”) and the Outcome depends on the Result of the previous night’s “Get ready for bed” Ability. If they don’t reach their Result of 8 hours of sleep, they’ll be cranky and less likely to “continue developing” the next day.

So with outside collaboration in place, we need just one more thing: autonomy.

Agents

fka workers, employees, entities, people, or actors.

An Agent is an individual entity, given autonomy by an Assignment input, that outputs Work product. It is responsible for sharing resources between Projects, which execute Abilities to accomplish Work. It can communicate (and interact) with other Agents. It has autonomy (decision-making authority) and control over its own Projects within its Assignments. It has a set of Skills learned and developed across its entire experience. It can deftly handle changing context, vague assignments, and random variables with varying degrees of success, often depending on resource constraints (i.e., capacity).
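To illustrate the resource-sharing part of this definition, here is a hypothetical `allocate` policy: an Agent with a fixed capacity (in hours) shares it across its Projects by priority. The greedy rule, the `Project` fields, and the example Projects other than “continue developing” are assumptions; the point is only that the Agent, not the Projects, decides how its capacity is spent.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    priority: int          # higher means more important to the Assignment
    hours_needed: float


def allocate(capacity: float, projects: list[Project]) -> dict[str, float]:
    """Hypothetical Agent-level decision: share limited capacity across Projects.

    Greedy by priority; an autonomous Agent could choose any policy here.
    """
    plan: dict[str, float] = {}
    remaining = capacity
    for project in sorted(projects, key=lambda p: p.priority, reverse=True):
        hours = min(project.hours_needed, remaining)
        plan[project.name] = hours
        remaining -= hours
    return plan


projects = [
    Project("continue developing", priority=3, hours_needed=6),
    Project("tidy playroom", priority=1, hours_needed=2),
    Project("get to school", priority=2, hours_needed=3),
]
plan = allocate(8, projects)
print(plan)  # {'continue developing': 6, 'get to school': 2, 'tidy playroom': 0}
```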

Building an autonomous layer for AI? We’d love to evaluate it.

My kids are Agents. They have autonomy, and every day, as they learn Abilities, they get more Assignments and manage more Projects. Their current Assignment is to learn to be the best humans they can be within our family’s “child rearing” budget (resources).

So where does this leave us?

Putting it all together

We now have a full maturity model of the layers it takes to reach agency.
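For a compact glossary, the five layers and their input/output names from the sections above can be captured as plain data. `LayerSpec`, `AMM_LAYERS`, and `parent_of` are hypothetical names for this sketch.

```python
from typing import NamedTuple, Optional


class LayerSpec(NamedTuple):
    name: str
    input_name: str
    output_name: str


# The five AMM layers, bottom-up, with the input/output names used in this article.
AMM_LAYERS = [
    LayerSpec("Function", "Command", "Response"),
    LayerSpec("Skill", "Instruction", "Execution"),
    LayerSpec("Ability", "Specification", "Result"),
    LayerSpec("Project", "Objective", "Outcome"),
    LayerSpec("Agent", "Assignment", "Work"),
]


def parent_of(name: str) -> Optional[str]:
    """The layer directly above `name`, or None for the top layer."""
    names = [layer.name for layer in AMM_LAYERS]
    index = names.index(name)
    return names[index + 1] if index + 1 < len(names) else None


for depth, layer in enumerate(AMM_LAYERS, start=1):
    print(f"{depth}. {layer.name}: {layer.input_name} -> {layer.output_name}")
```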

So what does a real world AI agent look like? Let’s look at an example through the lens of “Go to Market”, where Tarka is building today:

Today, so-called “agents” can “reason” through a set of “tools” based on “context”. While this gives the appearance of agency, it’s a very limited definition of such—and is very far from human agency.

It leaves a huge gap: the learnable Skills, adaptable Abilities, outcome-driven Projects, and autonomous Agents that humans exhibit. On these, what Silicon Valley has rushed to deem “agents” completely under-delivers. In short: we’re still in the toddler stage of AI agents.

Beyond standalone agents

But the world isn’t made up only of individual agents. Agents can work together and be coordinated, just like humans. That’s why we’ve envisioned a future based on Organization Design that encompasses three layers above Agents:

Teams

fka groups, collectives, squads, crews, rosters, clubs, or agencies

A Team is a collaborative unit within a Company, made up of Agents who work together on Projects. It is driven by a Purpose (input) and produces a Product (output), ideally contributing to the Company’s objective (i.e., Value creation). A Team is usually, but not always, led by a specific manager or lead Agent.

My family is a Team. It’s made up of the child Agents and the parent Agents. We collaborate (and plenty of times don’t), but we share the Purpose “Thrive” (input) and work toward the same Product, “Survived 2025” (output).

Companies

fka organizations, clans, firms, businesses, corporations, or cooperatives

A Company is a distinct organization that uses one or more Teams working toward a unified mission and leverages shared resources. It is driven by Resources (input) and returns Value (output). It functions within an Ecosystem.

My family sits within my broader lineage, which forms the Moore Clan. The grandparents have their own Team and often interact with ours within our Company. The entire family tree is a Company that has withstood the test of time within an Ecosystem.

Ecosystems

fka markets, industries, or environments

An Ecosystem is the broad competitive environment where multiple Companies vie for market share, resources, and strategic advantage by creating and capturing value. It is constrained by an Environment input and produces collective value (“GDP”) as output. There are many overlapping Ecosystems within the world.
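The three organizational layers nest inside one another, which a few dataclasses can sketch. Everything here is illustrative: the field names are hypothetical, the Resources and Environment inputs are left out for brevity, and “Value” and “GDP” are reduced to simple sums of made-up Product scores, which is a large simplification of the definitions above.

```python
from dataclasses import dataclass, field


@dataclass
class Team:
    name: str
    purpose: str            # input
    agents: list[str]
    product_value: float    # hypothetical score for the Team's Product (output)


@dataclass
class Company:
    name: str
    teams: list[Team] = field(default_factory=list)

    def value(self) -> float:
        # Assumption: a Company's Value is just the sum of its Teams' Products.
        return sum(team.product_value for team in self.teams)


@dataclass
class Ecosystem:
    name: str
    companies: list[Company] = field(default_factory=list)

    def gdp(self) -> float:
        # Assumption: the collective output is the sum of each Company's Value.
        return sum(company.value() for company in self.companies)


household = Team("household", purpose="Thrive",
                 agents=["parent", "parent", "child", "child"], product_value=3.0)
grandparents = Team("grandparents", purpose="Thrive",
                    agents=["grandparent", "grandparent"], product_value=2.0)
clan = Company("Moore Clan", teams=[household, grandparents])
neighbors = Company("Neighbors", teams=[Team("next door", "Thrive", ["neighbor"], 1.5)])
neighborhood = Ecosystem("neighborhood", companies=[clan, neighbors])

print(clan.value(), neighborhood.gdp())  # 5.0 6.5
```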

Another human example

For another fun example: how do you teach someone, using only written instructions, to make a PB&J sandwich? If they were born yesterday, it’s actually quite difficult:

Where does [agent-company] fit?

We’d wager that no AI agent today meets our standard of an “Agent” as defined in the Agent Maturity Model. We’ve only begun to scratch the surface of AI agents: so far none are autonomous, and most certainly none build on Projects or even Abilities. While GPT-4o and similar “reasoning models” offer glimpses of reasoning Abilities, they’re often integrated directly with Functions and then called “agents” (i.e., LLMs that can “call tools”).

While “natural language reasoning” is one approach to reasoning, it’s not what we consider cognitive reasoning; it’s better thought of as chained prompting. Why? It has to do with how language is encoded.

So what?

AI is Tech’s “Quartz Crisis” moment. VC-funded companies are throwing things at the wall to see what sticks. But getting intelligence right, like raising human agents, takes a long-term strategic view.

In true Silicon Valley fashion, the market has jumped to the wrong definition too quickly, over-promising and under-delivering.

We don’t suggest that development should stop or slow down, just that there is a large difference between the written word and the human experience we’re emulating with LLMs.

So let’s stop throwing dumb things together and hoping for the best. Let’s start delivering with intentionality, and delivering intelligence for agentic work. Let’s get this right.

Related readings

Anthony Diamond’s Guide to Identifying High-Leverage AI Agent Applications

Julian Jaynes’ The Origin of Consciousness in the Breakdown of the Bicameral Mind


Last updated March 1, 2025

All feedback and criticism is welcome.

Thanks to A and E for teaching me how to develop agency.